Governments around the world are grappling with how best to control the increasingly unruly beast of artificial intelligence (AI).

This fast-growing technology promises to boost economies and make simple tasks easier to complete, but it also poses serious risks, such as AI-enabled crime and fraud, an increased spread of misinformation and disinformation, increased public surveillance, and further discrimination against already disadvantaged groups.

The European Union is taking a leading role worldwide in tackling these risks. In recent weeks, its Artificial Intelligence Act has come into force.

This is the first law in the world aimed at comprehensively managing AI risks, and there is much that Australia and other countries can learn from it as they too seek to ensure that AI is safe and beneficial for all.

AI: a double-edged sword

AI is already widespread in human society. It forms the basis of the algorithms that recommend music, films and TV shows on applications such as Spotify or Netflix. It is in cameras that identify people in airports and shopping malls. And it is increasingly used in recruitment, education and healthcare.

But AI is also being used for more disturbing purposes. It can create deepfake images and videos, facilitate online fraud, enable mass surveillance, and violate our privacy and human rights.

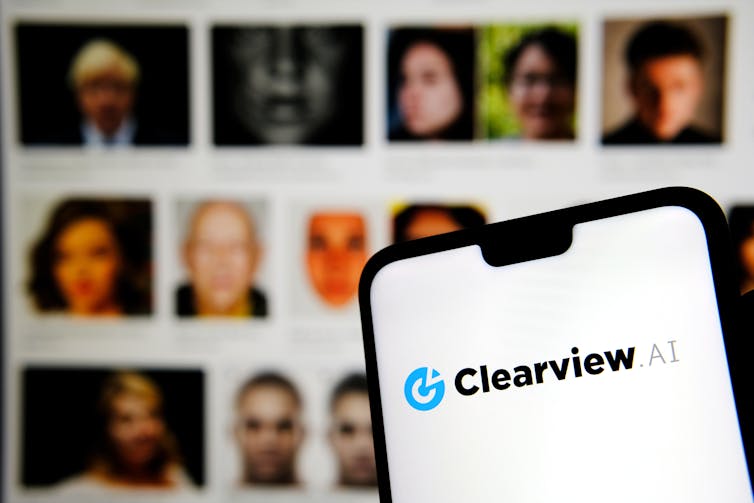

For example, in November 2021, Australia’s Privacy Commissioner Angelene Falk ruled that Clearview AI, the company behind a facial recognition tool of the same name, violated privacy laws by collecting photos of people from social media sites for training purposes. However, an investigation by Crikey earlier this year found that the company was still collecting photos of Australians for its AI database.

Cases like this underscore the urgent need for better regulation of AI technologies. Even AI developers are calling for laws to mitigate AI risks.

Image credit: Ascanio/Shutterstock

The EU legislation on artificial intelligence

The European Union’s new AI law came into force on August 1, 2024.

Crucially, the requirements for different AI systems are set according to the level of risk they pose. The greater the risk an AI system poses to people’s health, safety or human rights, the more stringent the requirements it must meet.

The law includes a list of prohibited high-risk systems. This list includes AI systems that use subliminal techniques to manipulate individual decisions. It also includes unrestricted, real-time facial recognition systems used by law enforcement agencies, similar to those currently deployed in China.

Other AI systems, such as those used by government authorities or in education and healthcare, are also considered high risk. Although they are not banned, they must meet many requirements.

For example, these systems must have their own risk management plan, be trained on high-quality data, meet accuracy, robustness and cybersecurity requirements, and provide a certain level of human oversight.

Lower-risk AI systems, such as various chatbots, only need to meet certain transparency requirements. For example, users must be informed that they are interacting with an AI bot and not a real person. AI-generated images and text must also carry a notice that they were generated by AI and not by a human.

Designated EU and national authorities will monitor whether AI systems deployed on the EU market meet these requirements and will impose fines for non-compliance.

Other countries follow suit

The EU is not alone in taking action to tame the AI revolution.

Earlier this year, the Council of Europe, an international human rights organization with 46 member states, adopted the first international treaty requiring AI to respect human rights, democracy and the rule of law.

Canada is also currently debating the AI and Data Bill. Like the EU law, it would regulate different AI systems according to the risks they pose.

Instead of a single law, the U.S. government recently proposed a number of separate laws that address different AI systems in different sectors.

Australia can learn – and lead

There is great concern about AI in Australia, and steps are being taken to put the necessary safeguards around the new technology.

Last year, the Australian Federal Government conducted a public consultation on safe and responsible AI and subsequently established an AI Expert Group, which is currently working on an initial draft of AI legislation.

The government is also planning legislative reforms to address the challenges of AI in healthcare, consumer protection and the creative industries.

The risk-based approach to AI regulation used by the EU and other countries is a good starting point when thinking about regulating different AI technologies.

However, a single AI law will never be able to address the complexity of the technology in specific industries. For example, the use of AI in healthcare will raise complex ethical and legal questions that will need to be addressed in specific healthcare laws. A general AI law will not be enough.

Regulating different AI applications across different sectors is no easy task, and there is still a long way to go before comprehensive and enforceable laws are in place in all jurisdictions. Policymakers must join forces with industry and communities across Australia to ensure AI delivers the promised benefits to Australian society – without the associated harms.